The sale of stolen ChatGPT accounts is on the rise, reflecting the actions of criminals in countries where the use of the artificial intelligence chatbot has been blocked by OpenAI.

Trade in stolen ChatGPT accounts, especially premium subscriptions, is on the rise, reflecting the actions of criminals in countries where the use of the artificial intelligence chatbot has been blocked by OpenAI, which owns the application. These criminals are bypassing the geofencing restrictions and gaining unlimited access to ChatGPT, as well as to the original user’s queries, which can include both personal and corporate information.

OpenAI imposes geofencing restrictions on access to its platform from certain countries, such as Russia, China and Iran. Geofencing is a “virtual fence” that uses the global positioning system (GPS) or radio frequency identification (RFID) to define a geographical boundary. Once this “virtual fence” is established, the administrator can set up triggers that send a text message, email alert, or app notification when a mobile device enters or leaves the specified area.
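The general idea of a "virtual fence" with enter and leave triggers can be sketched in a few lines of Python. This is a minimal illustration of the GPS-style definition above, assuming a circular fence and great-circle distance. The names `Geofence` and `crossing_event` are hypothetical, and the sketch does not describe how OpenAI enforces its country restrictions.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius, adequate for a rough sketch


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


class Geofence:
    """Hypothetical circular fence: a centre point and a radius in kilometres."""

    def __init__(self, lat, lon, radius_km):
        self.lat = lat
        self.lon = lon
        self.radius_km = radius_km

    def contains(self, lat, lon):
        return haversine_km(self.lat, self.lon, lat, lon) <= self.radius_km


def crossing_event(fence, previous, current):
    """Return 'enter', 'exit', or None for a device moving from previous to current.

    Both positions are (lat, lon) tuples. A real system would send the
    text message, email, or app notification where this returns a label.
    """
    was_inside = fence.contains(*previous)
    is_inside = fence.contains(*current)
    if is_inside and not was_inside:
        return "enter"
    if was_inside and not is_inside:
        return "exit"
    return None
```

Calling `crossing_event(fence, old_position, new_position)` on each location update is enough to decide whether a notification should fire. The circular shape is a simplification chosen to keep the example short.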

The increase in the sale of stolen accounts began in March, according to a team of security researchers at Check Point Research (CPR), the threat intelligence division of Check Point Software, who say they have noticed an “increase in conversations in underground forums related to the leak or sale of compromised premium ChatGPT accounts.”

Premium accounts refer to ChatGPT Plus, a subscription service that costs $20 per month and gives users access to new features and faster response times than the free service. While most stolen accounts are offered for sale, some criminals share compromised premium accounts “to advertise their own services or tools to steal the accounts.”

CPR experts say that the ChatGPT API allows cybercriminals to bypass various restrictions, as does the use of a premium ChatGPT account. “All this leads to a growing demand for stolen ChatGPT accounts, especially paid premium accounts. In the underworld of the dark web, where there is demand, there are smart cybercriminals ready to seize the business opportunity,” says Sergey Shykevich, Threat Intelligence Group Manager at CPR.

According to him, cybercriminals often exploit the fact that users recycle the same password across multiple platforms. Using this knowledge, hackers load sets of email and password combinations into dedicated software, known as an account checker, and run an attack against a specific online platform to identify the sets of credentials that correspond to valid logins on that platform. Ultimately, an account takeover occurs when a cybercriminal takes control of an account without the authorization of the account holder.
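From a defender's point of view, the pattern described above leaves a recognisable trace: one source failing to log in to many different accounts in a short period. The following is a minimal sketch of how a platform might flag that pattern, not a description of any real product. The class name `StuffingDetector`, the 60-second window, and the threshold of 10 accounts are all assumptions chosen for illustration.

```python
from collections import defaultdict, deque


class StuffingDetector:
    """Hypothetical detector: flags a source whose failed logins span many accounts."""

    def __init__(self, window_seconds=60.0, max_distinct_accounts=10):
        self.window_seconds = window_seconds
        self.max_distinct_accounts = max_distinct_accounts
        # source identifier -> deque of (timestamp, username) failed attempts
        self._failures = defaultdict(deque)

    def record_failure(self, source, username, timestamp):
        """Record one failed login and return True if the source looks suspicious."""
        events = self._failures[source]
        events.append((timestamp, username))
        # Drop attempts that have fallen out of the sliding window.
        while events and timestamp - events[0][0] > self.window_seconds:
            events.popleft()
        distinct_accounts = {name for _, name in events}
        return len(distinct_accounts) > self.max_distinct_accounts
```

Counting distinct usernames, not raw failures, keeps a legitimate user who mistypes their own password several times from being flagged. Because attackers can spread attempts across many proxies, per-source counting on its own is a weak signal and would normally be combined with other checks.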

In one example, CPR researchers found a configuration file for SilverBullet for sale. SilverBullet is a software tool that can be used for data scraping and analysis, automated penetration testing, unit testing through Selenium, and more. The tool is also frequently used by cybercriminals to carry out credential stuffing and account-checking attacks against different websites and thus steal accounts on online platforms.

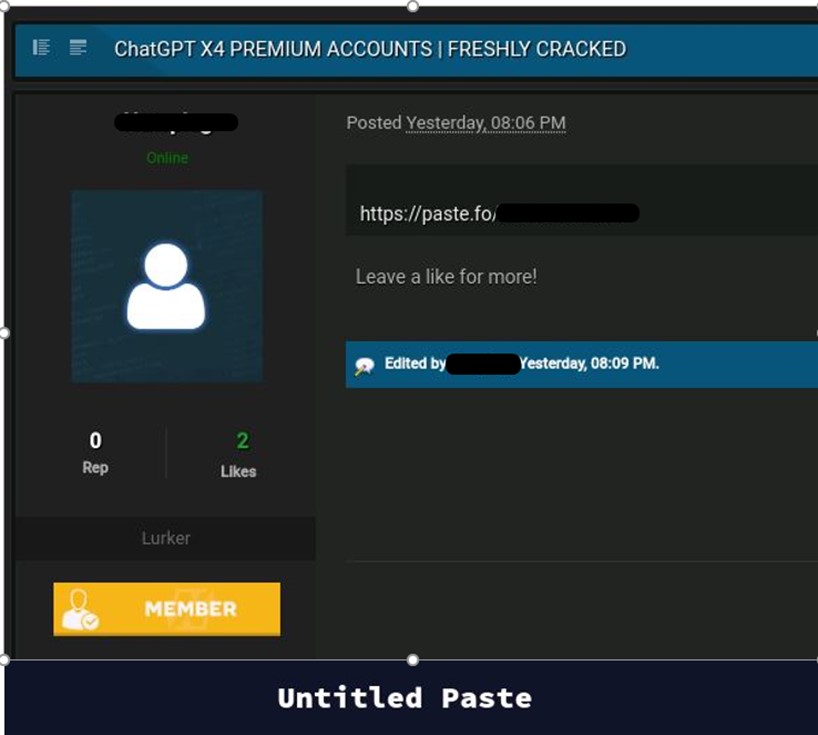

In the following example, a cybercriminal shared four stolen premium ChatGPT accounts. The way these accounts were shared and their structure led CPR to conclude that they were stolen using a ChatGPT account checker.

Cybercriminal sharing four premium ChatGPT accounts

In this specific case, CPR identified a cybercriminal offering a SilverBullet configuration file that allows a set of credentials to be checked against the OpenAI platform in an automated way. This allows accounts to be stolen on a massive scale. The process is fully automated and can initiate between 50 and 200 checks per minute (CPM). Furthermore, it supports proxies, which in many cases allows it to bypass the protections websites deploy against these attacks.

Another cybercriminal, who focuses solely on fraud against ChatGPT products and even goes by the name “gpt4”, offers for sale not only ChatGPT accounts but also a configuration for another automated tool that checks the validity of credentials.

Furthermore, on March 20, an English-speaking cybercriminal started advertising a ChatGPT Plus lifetime account service with 100% satisfaction guaranteed. A lifetime upgrade of a regular ChatGPT Plus account (opened with an email address provided by the buyer) costs $59.99, while OpenAI’s legitimate price for the service is $20 per month. To reduce costs, this clandestine service also offers the option of sharing access to the ChatGPT account with another cybercriminal for $24.99 for life.

As in other illicit cases in which a threat actor provides services at a price significantly lower than the legitimate one, CPR assesses that payment for the upgrade is made using previously compromised payment cards.

ChatGPT Plus underground lifetime account service

Source: CisoAdvisor